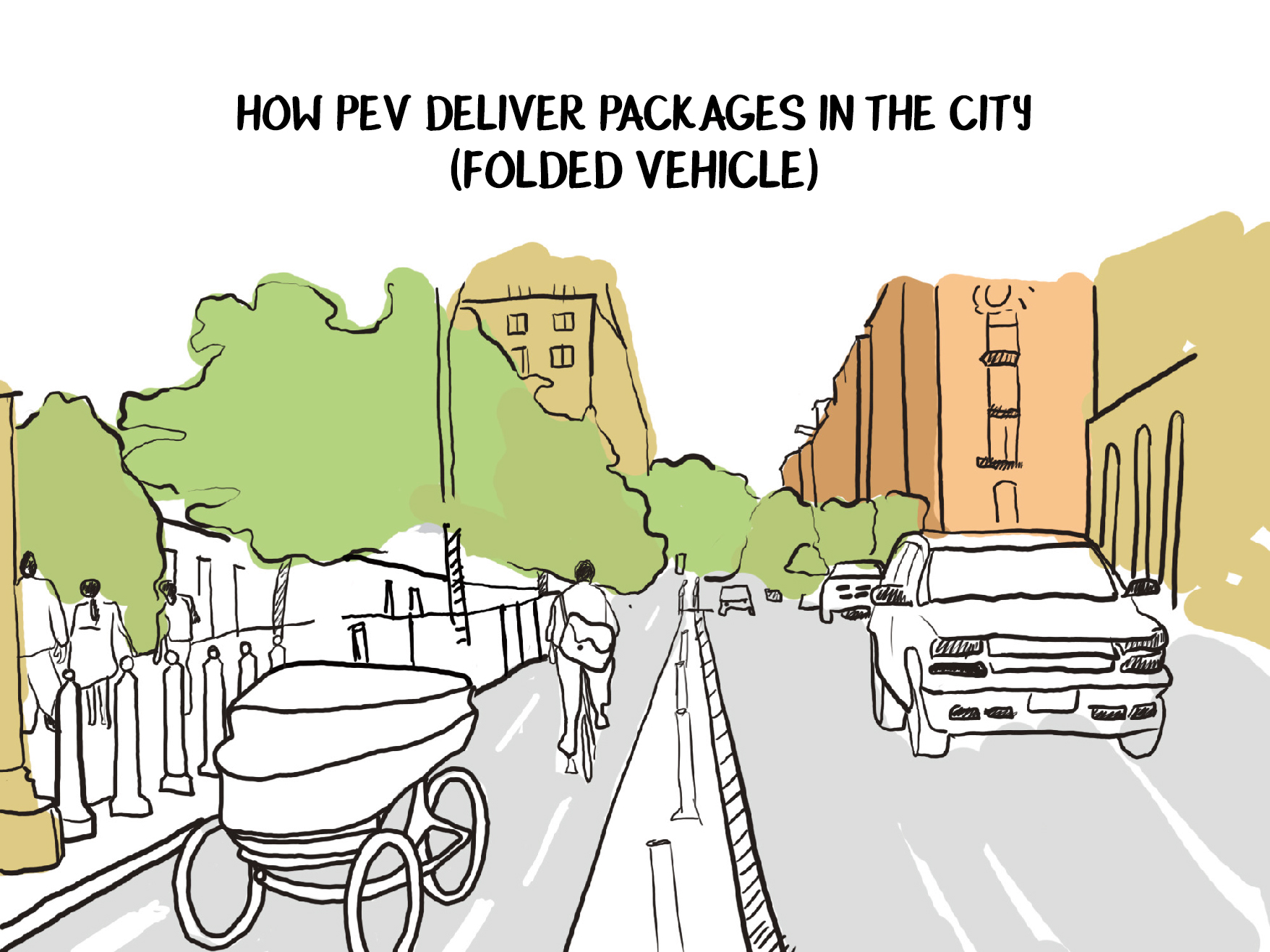

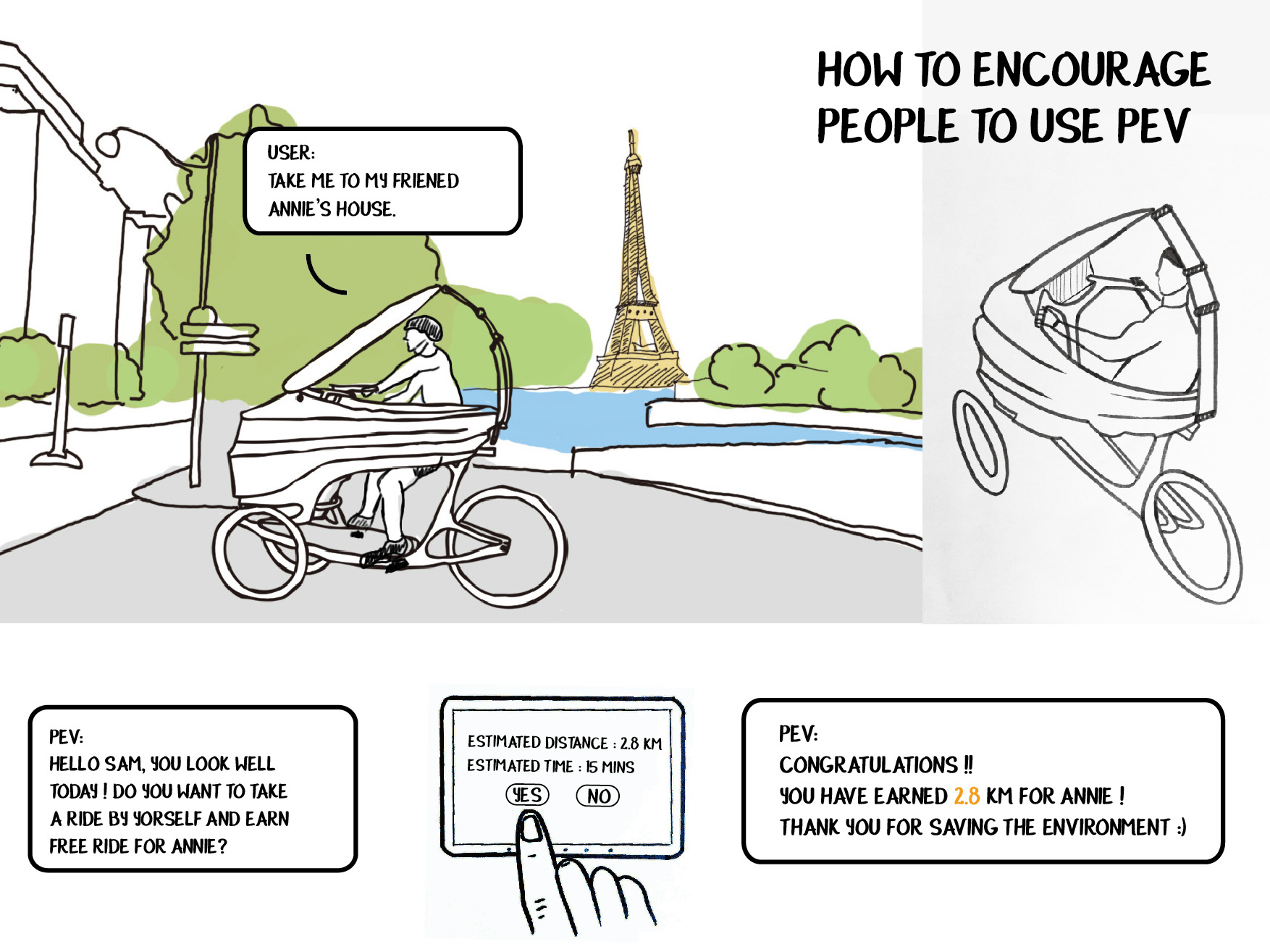

PEV (Persuasive Electric Vehicle) is a lightweight autonomous vehicle. It aims to solve urban mobility challenges with a healthy, convenient, sustainable alternative to cars. The PEV is low-cost, agile, and can be either an electrically assisted tricycle for passenger commuting or an autonomous carrier for package delivery.

I have been working on this project for four years and helped develop it from concept to launch. I have separated this page into four parts, each describing a different stage of the product development cycle.

This is a joint project between the MIT Media Lab and the Denso Corporation. This is not a render: it is an actual product now being commercialized by our sponsor company! Woo-hoo 🎉🎉🎉

As a product designer on this project, I work on human-machine interface (HMI) design, industrial design, mechanical system design, and user experience design for the product.

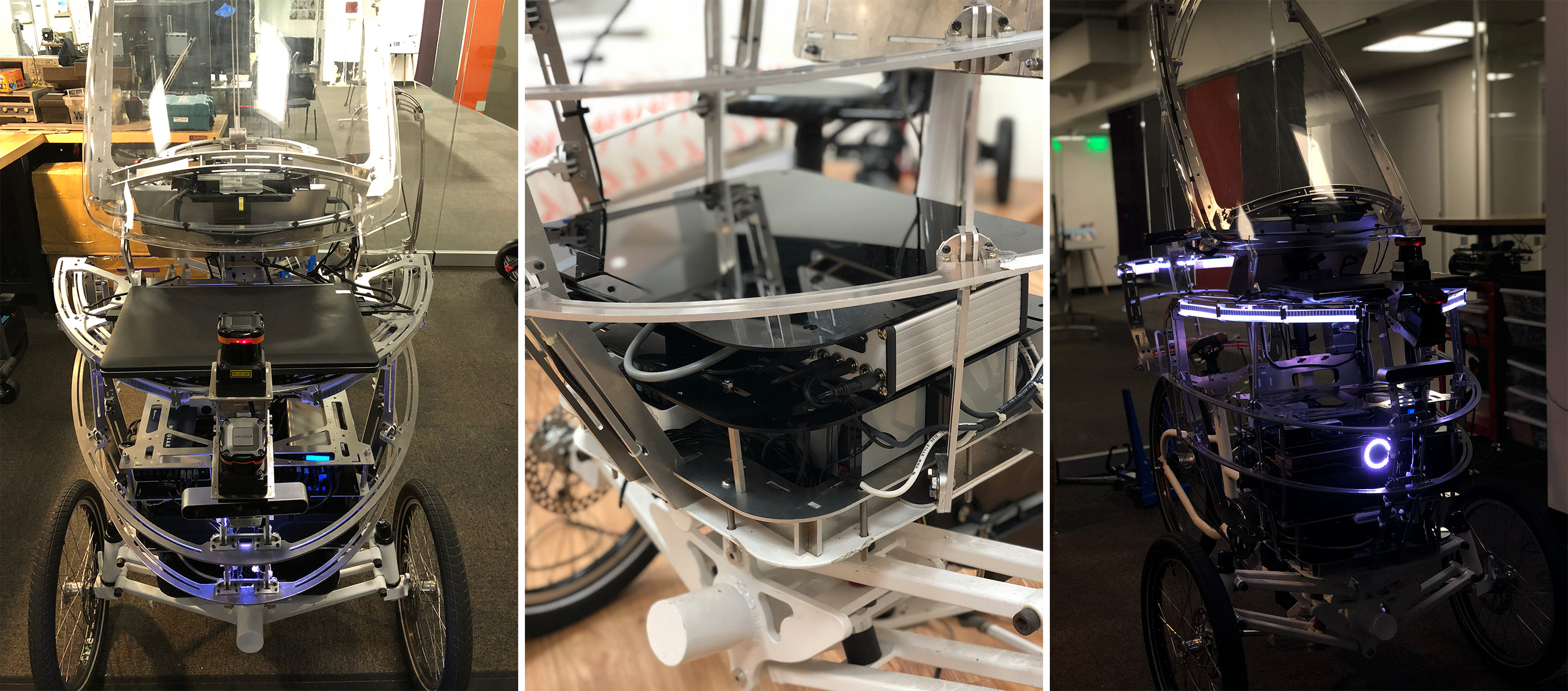

Our team built 4 different prototypes at the MIT Media Lab before the final product.

Mechanical system design, industrial design, rapid prototyping, machining, interaction design.

Rhinoceros, SolidWorks, Arduino, CNC machining, laser cutting, waterjet cutting, 3D printing, HTML, CSS, JavaScript, ROS.

Michael Lin, Phil Tinn, Justin Zhang, Jason Wang, Vincy Hsiao, Kai Chang, Luke Jiang, Yago Lizarribar, Bill Lin, Danny Chou, Abhishek Agarwal.

Learn more on the Media Lab project website.

New modes of 21st century urban transportation are becoming more lightweight, electrified, connected, shared, and autonomous. Cohabitation of humans and machines is an increasingly important question, and one which requires careful attention and design. We strive to enable new forms of human-machine co-existence, trust, and collaboration.

PEV Eyes

PEV’s headlights point toward pedestrians and signal to them that it is safe to pass.

Projection interaction

At night, the PEV uses projection to signal to pedestrians that it is aware of their presence and to interact with them.

Air curtain

The air curtain keeps the rider comfortable and keeps mosquitoes away, a common need in East Asia.

Thermal seating

The seat generates cool/warm air to provide comfort.

Thermal storage

The PEV uses its HVAC system to keep the storage compartment cool for package delivery.

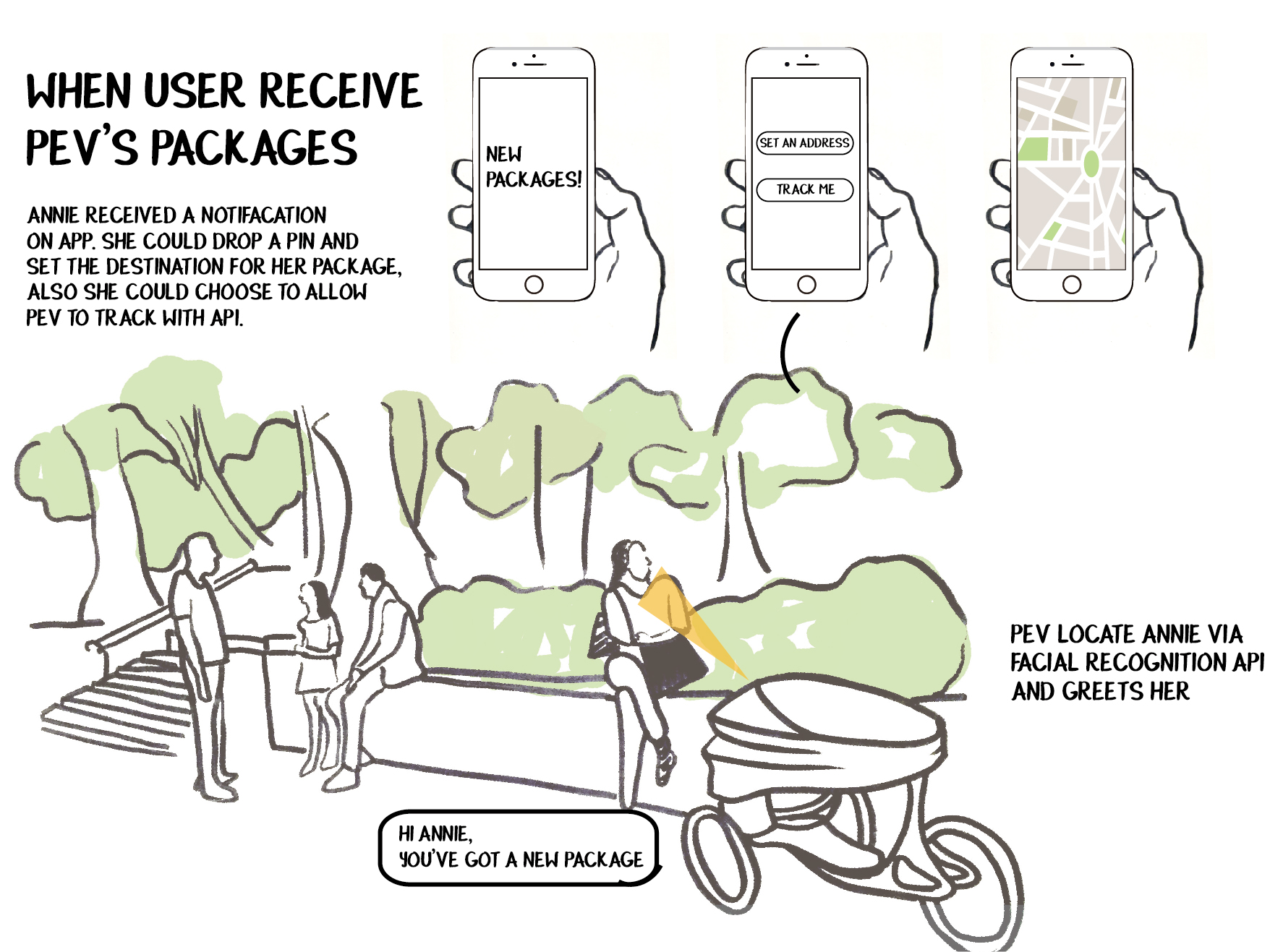

Facial recognition

An inner camera detects whether the rider is paying attention to the road, based on the direction of the eyes and chin. If the rider does not pay attention for more than 10 seconds, the seat vibrates as a cue to refocus.
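The timing rule above can be sketched as a small state machine. This is an illustrative sketch, not the PEV's actual code. The only value taken from the text is the 10-second threshold. The per-frame `attentive` flag is assumed to come from an upstream eye/chin-direction classifier, which is hypothetical and not shown.

```python
from typing import Optional

# Threshold taken from the text: more than 10 s of inattention triggers the seat cue.
INATTENTION_LIMIT_S = 10.0


class AttentionMonitor:
    """Timing rule for the inattention cue.

    The per-frame `attentive` flag is assumed to come from an upstream
    eye/chin-direction classifier (hypothetical, not shown here).
    """

    def __init__(self, limit_s: float = INATTENTION_LIMIT_S) -> None:
        self.limit_s = limit_s
        self._away_since: Optional[float] = None  # time the rider first looked away

    def update(self, timestamp_s: float, attentive: bool) -> bool:
        """Return True if the seat should vibrate for this frame."""
        if attentive:
            # Rider is looking at the road again: reset the timer.
            self._away_since = None
            return False
        if self._away_since is None:
            # First inattentive frame: start timing.
            self._away_since = timestamp_s
        return timestamp_s - self._away_since > self.limit_s
```

Suppose the rider looks away at t = 1 s. `update` first returns True on a frame after t = 11 s. It returns False again as soon as the rider looks back at the road.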

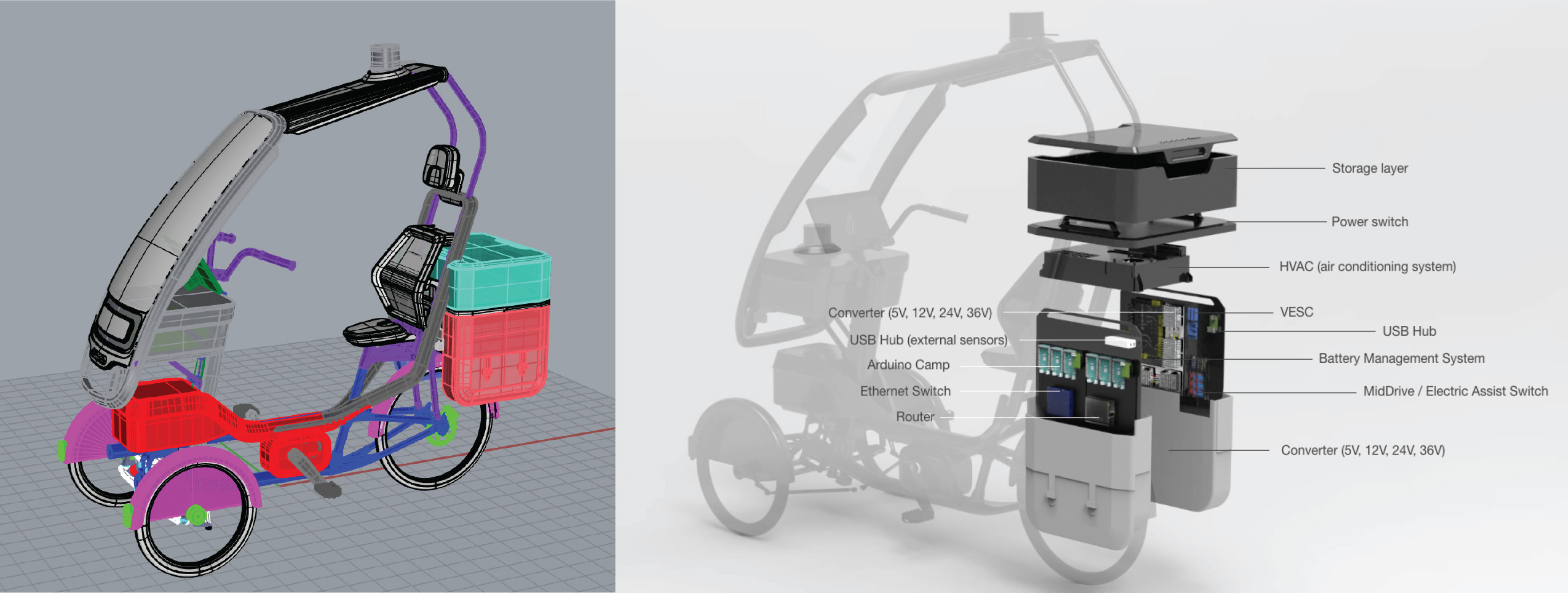

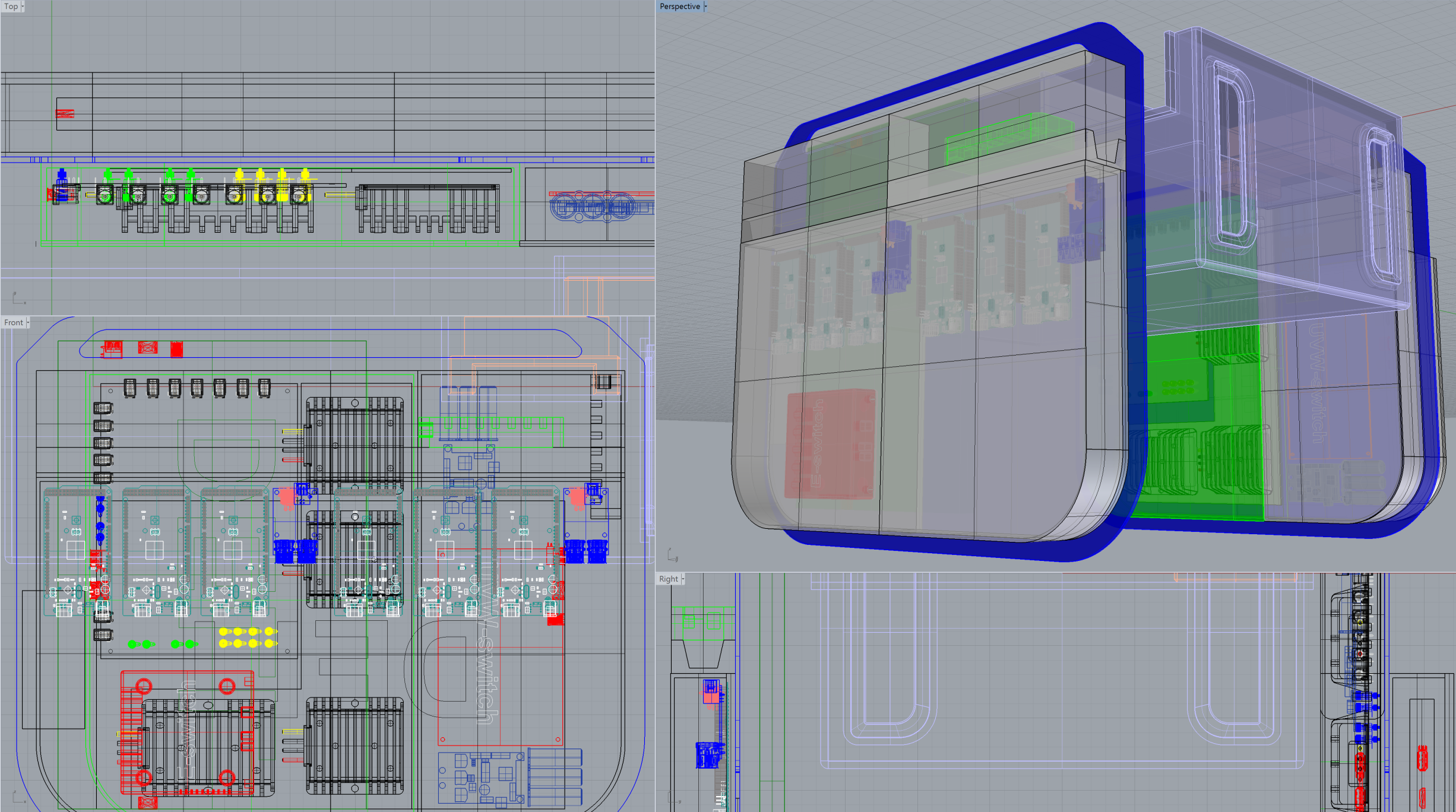

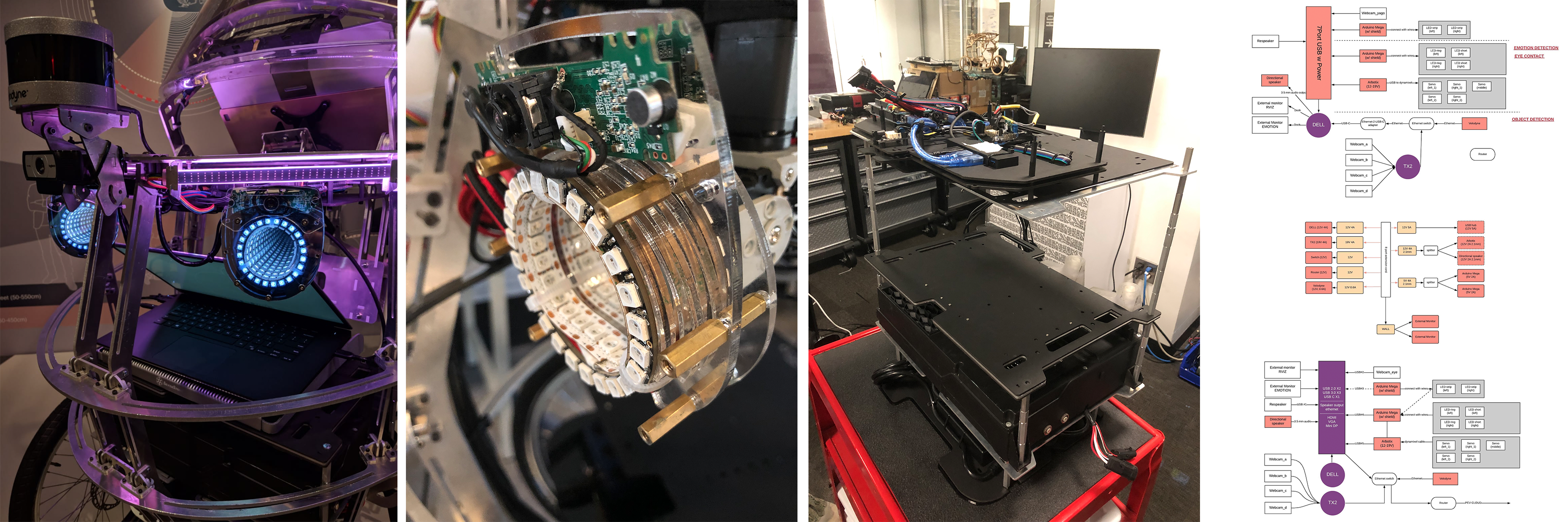

All of the above interactions were controlled by a compact system called the Interaction Development Kit (IDK), which was my Master’s thesis project at the MIT Media Lab in 2019. In short, the IDK is a piece of hardware containing all the tools a designer needs to prototype human-machine interfaces for a robot. It connects to the robot in a plug-and-play manner.

For this version, I adapted the IDK to fit the PEV; however, it can also be adapted for other robots. Learn more in my Master's thesis: An Interaction Development Kit to design interactions for lightweight autonomous vehicles 👨‍🎓!

The IDK introduces a new approach for designers and developers to design interactions for micro-mobility systems. It provides a tangible interface that embodies the concept of a software development kit (SDK), carrying that concept from a virtual tool to a physical object and facilitating the human-machine interface design and prototyping process.

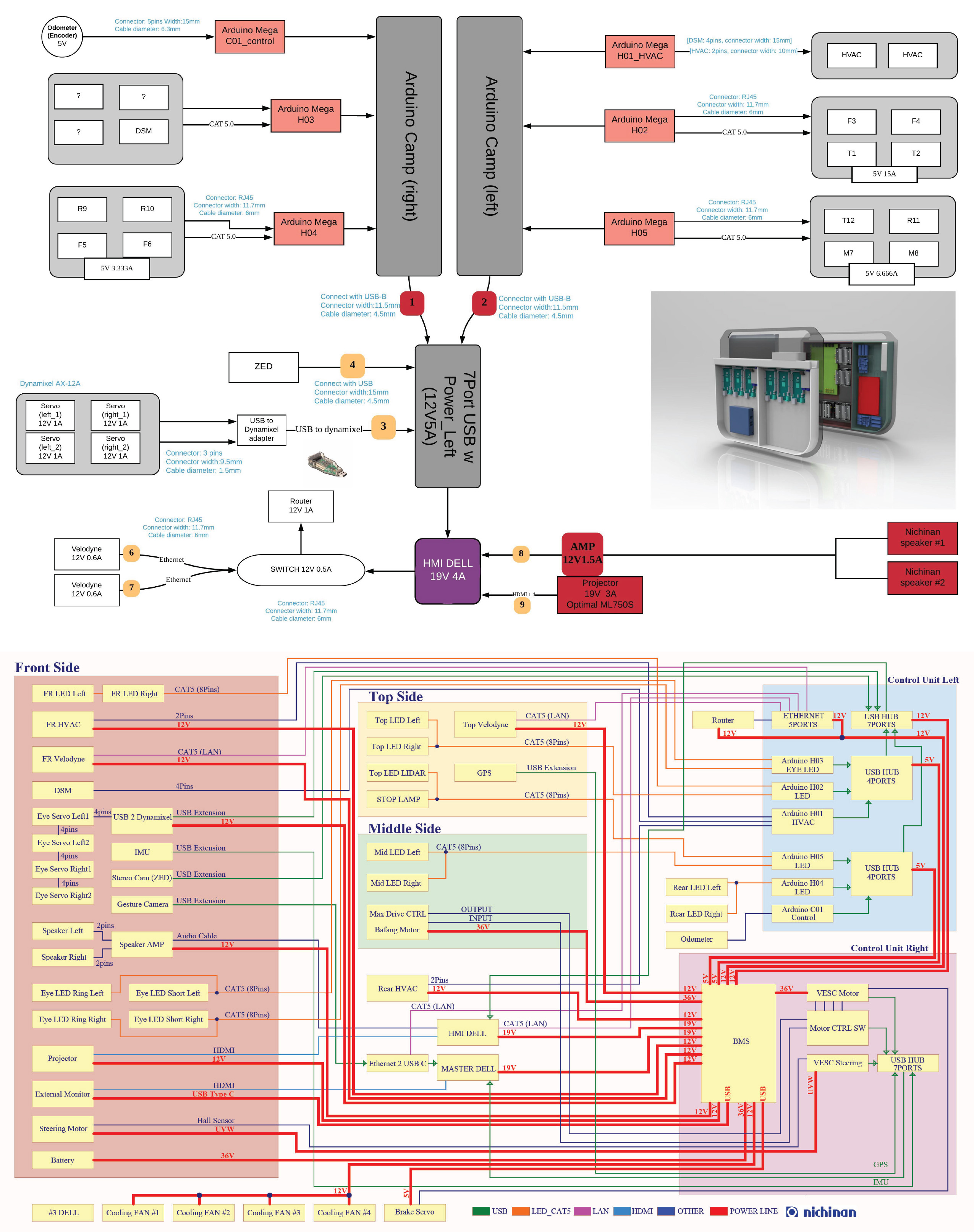

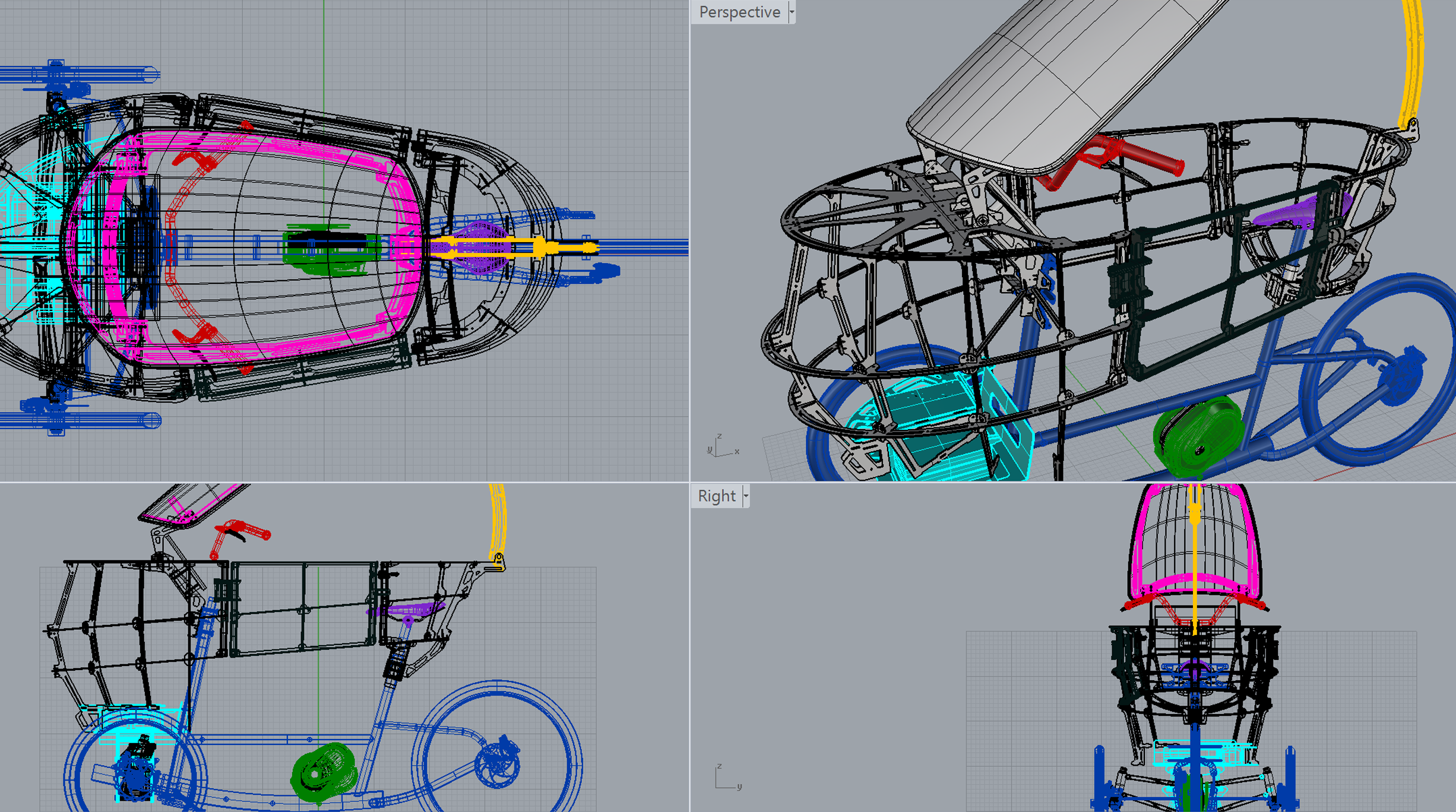

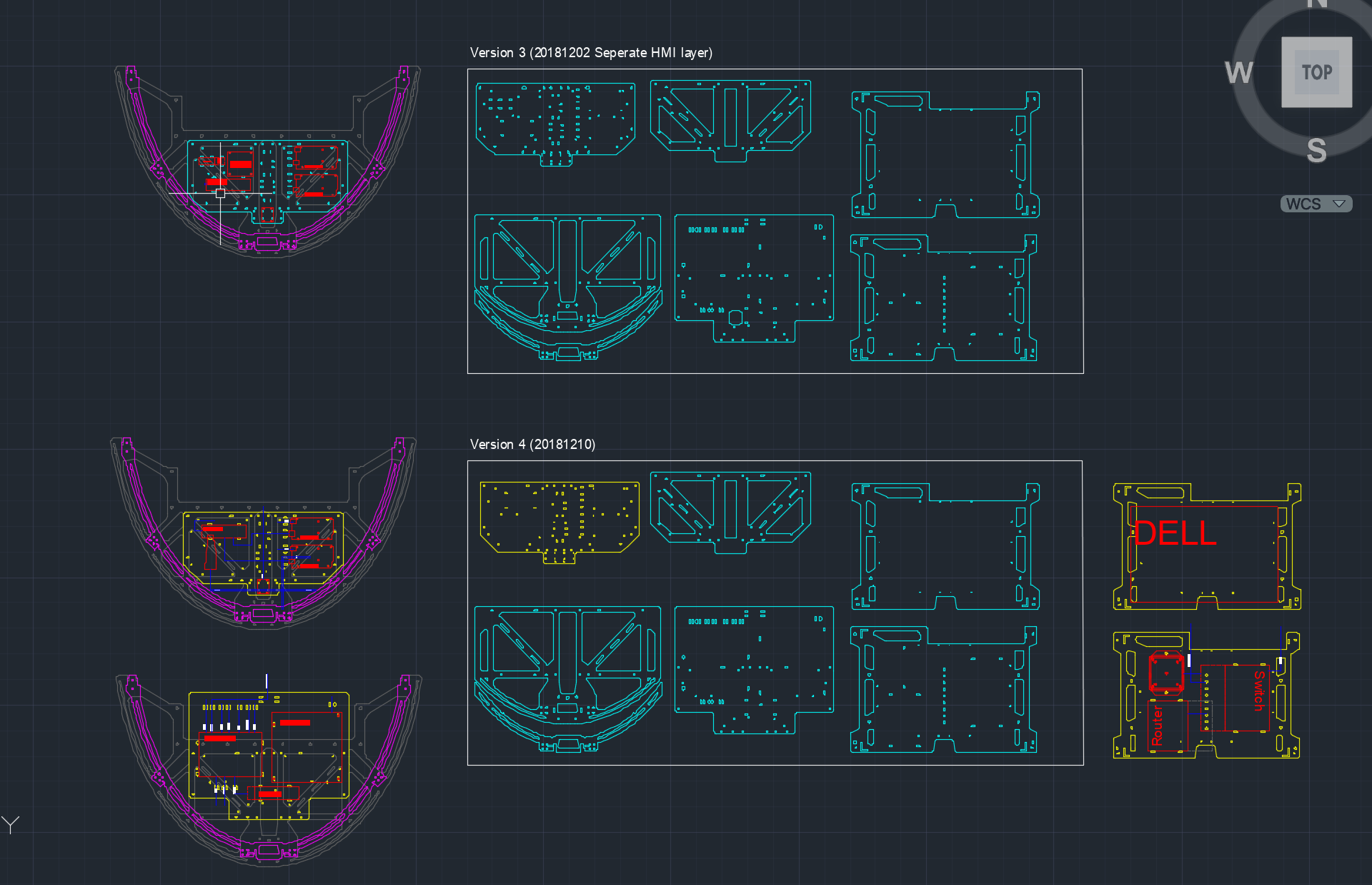

Sensor integration (LiDAR, GPS, antenna, PCBs, battery, etc.) shapes the design of the product. I believe it is critical for designers to understand the limitations of the hardware and sensors before diving into product design.

I start by laying out the essential components to learn the hardware constraints. I then move to CAD software (Rhino, SolidWorks, AutoCAD) to iterate on the design, packaging the sensors into minimal space while preserving agility. I also used diagrams and 3D models to communicate with the fabrication company and align on design specifications.

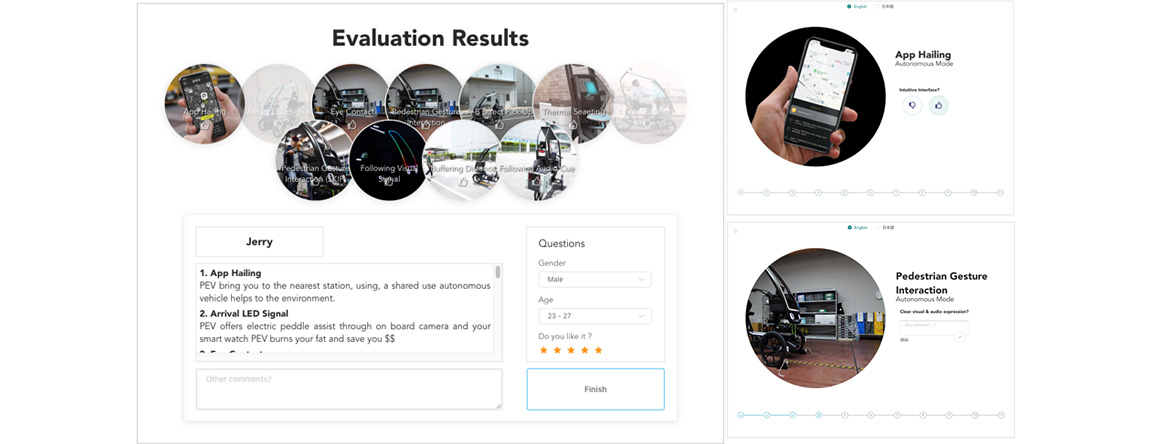

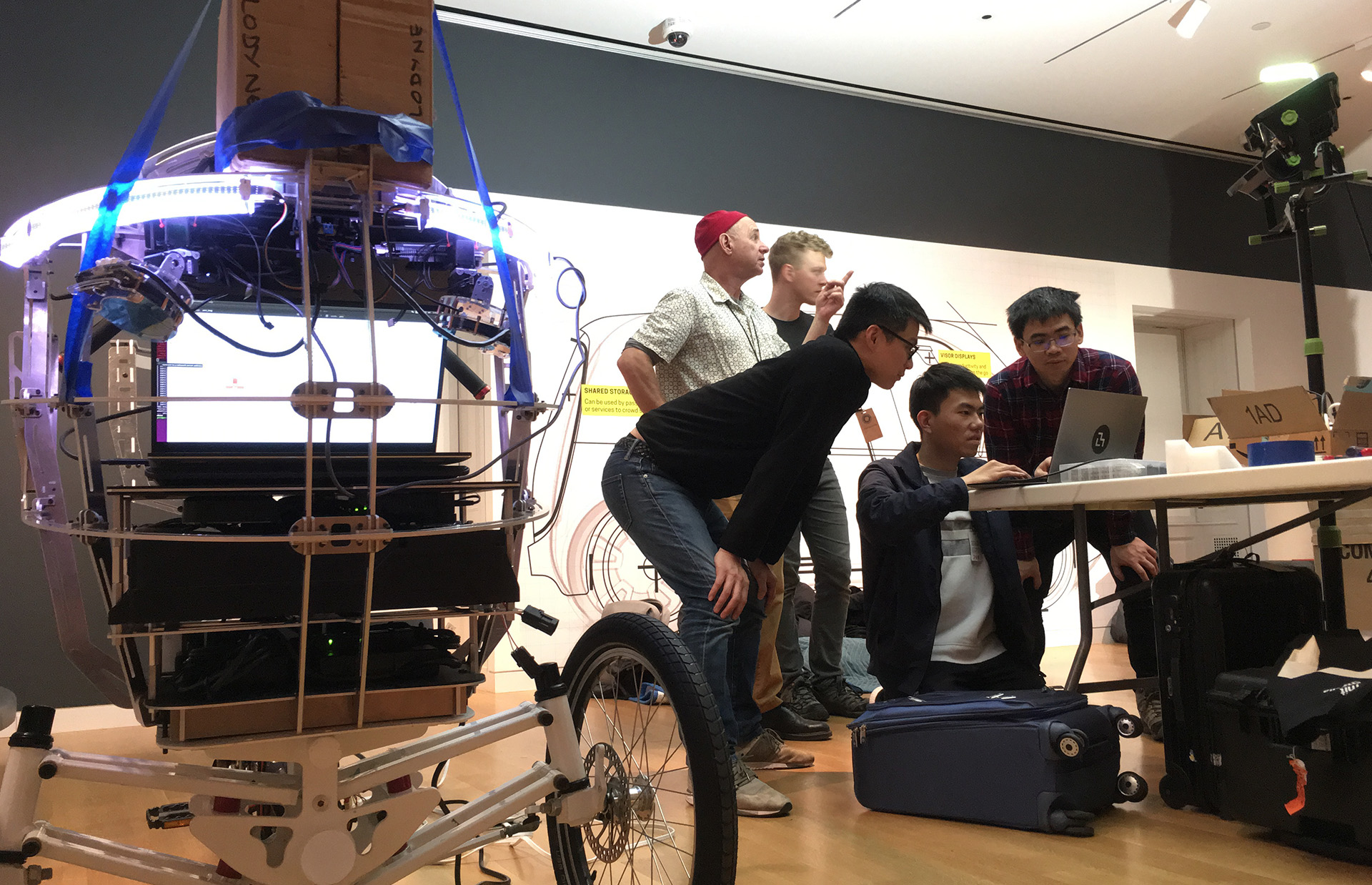

After building the prototype, we ran several rounds of user testing and human factors testing with our sponsor company. Because we hope to deploy the PEV in urban areas someday, feedback from industry experts is truly helpful for improving our design. We therefore invited 10 executives from various departments (R&D, design, engineering, and vehicle dynamics) of Denso Corporation, one of the largest automotive component manufacturers in the world. They tested the V2P (Vehicle-to-Pedestrian) and V2R (Vehicle-to-Rider) interactions that we designed for the PEVs.

After experiencing all of the interactions, the participants completed a post-study survey on a web platform. They gave feedback on the interactions and indicated how we should improve them before deploying PEVs in the real world to interact with customers (riders).

The prototype and demo were successful: the PEV is now officially being produced and commercialized by our sponsor company 🎉🎉! With this project, our team helped the lab secure an additional $300,000 in research funding from five sponsor companies around the world. Stay tuned for more press release information on the MIT Media Lab website.

Our team built four aluminum prototypes before the final version. This page shows how we progressed from a hand-made prototype to a professionally built product.

The PEV uses standard bicycle components and is lightweight yet robust. Its sensors are easy to reconfigure. It has a 250 W mid-drive electric motor and a 10 Ah battery pack, giving it a range of 25 miles per charge and a top speed of 20 miles per hour. PEVs operate in bike lanes, avoiding congestion and adding incentives to build more bikeable cities.

Learn from shared bikes

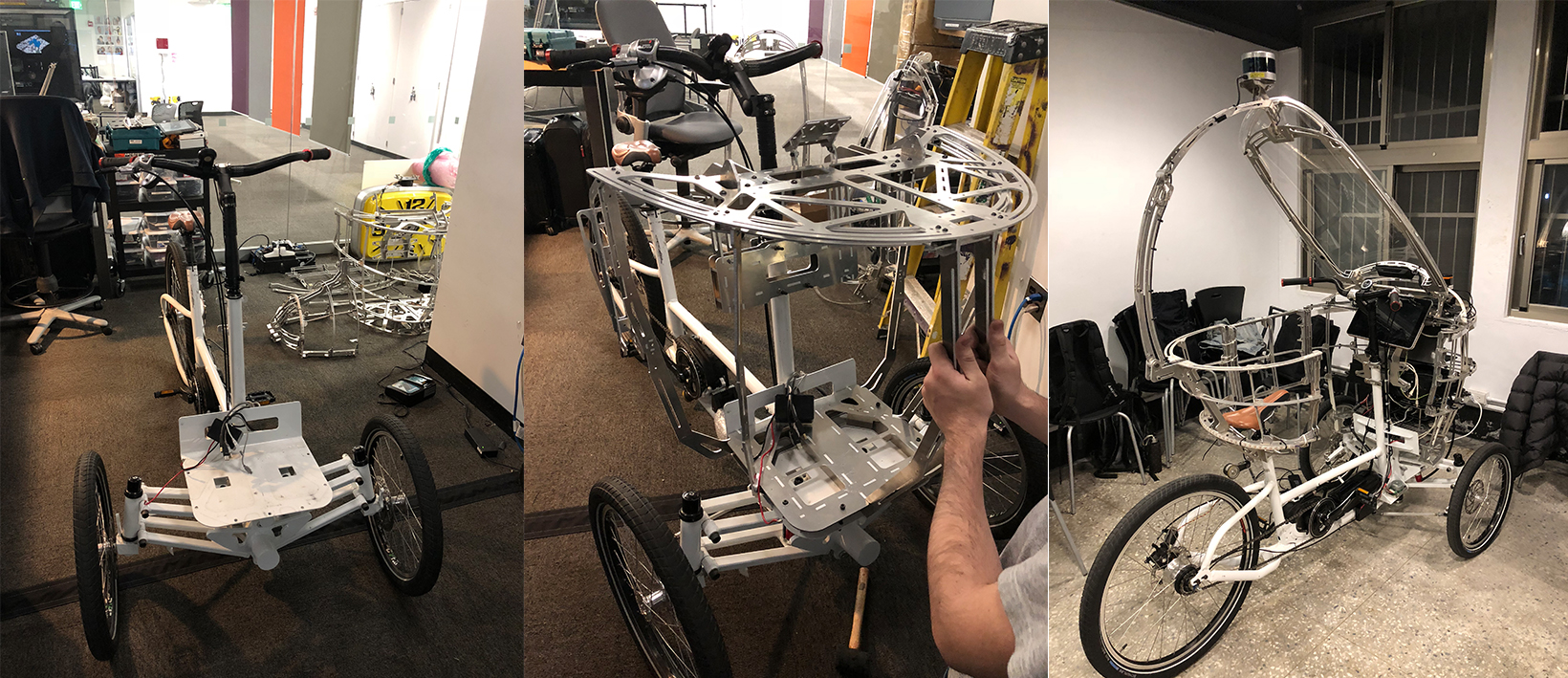

Aluminum made prototype

To test different designs rapidly, we chose aluminum to build the PEV's prototypes. We use multipurpose 6061 aluminum sheets, which are lightweight yet robust and malleable enough for us to cut, bend, or reassemble.

Bike linkage study, mid-drive motor study, steering motor study.

2D CAD file study, 3D modeling, mechanical system design, hardware layout design.

Fabrication: laser cutting, waterjet cutting, CNC, 3D printing, hardware assembly, BOM.

Parts assembly, sensor testing, collaborating with engineering teams to test-drive the vehicle.

The prototype(s) were tested in the United States, Taiwan, and Japan.

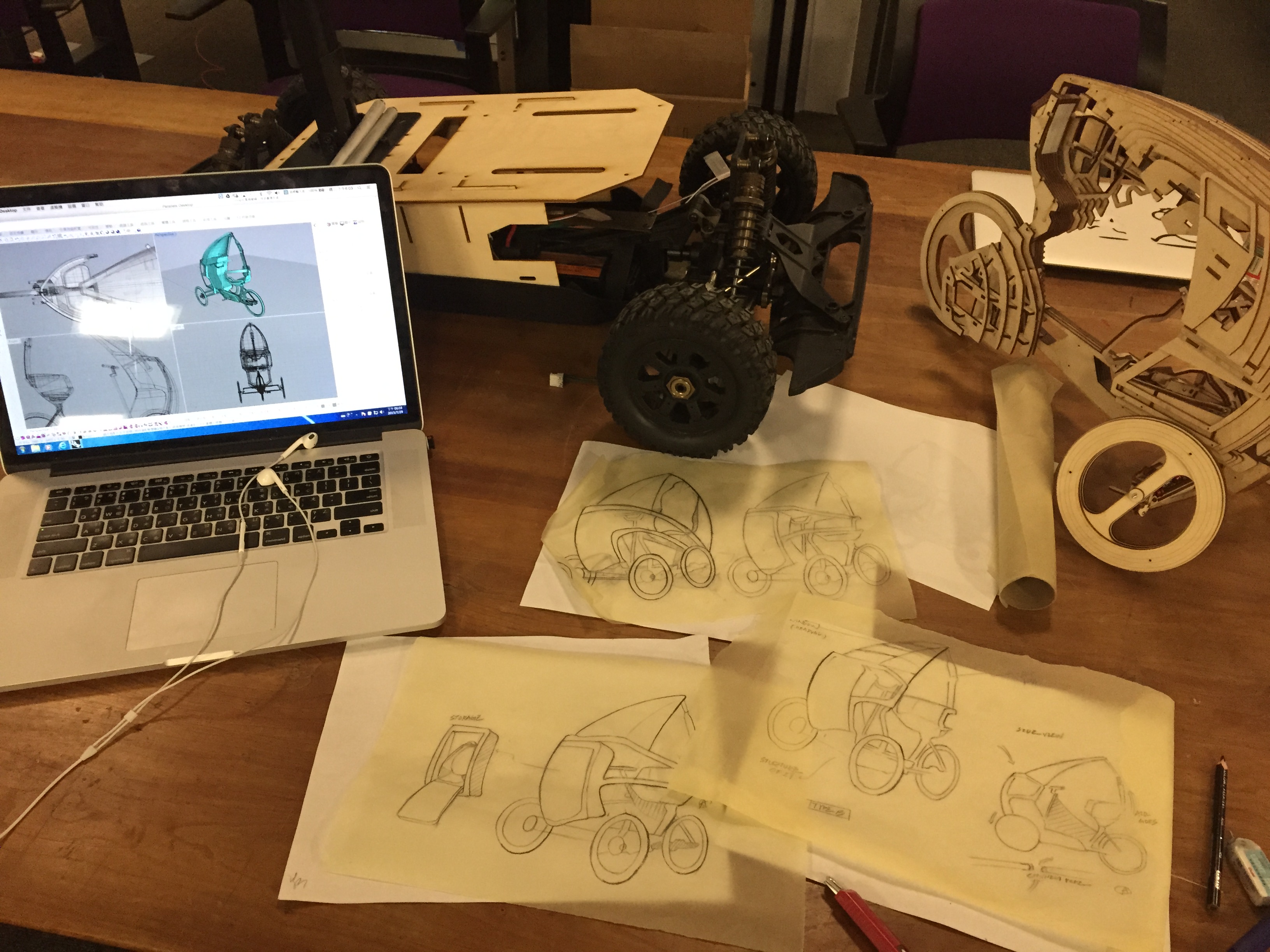

Testing, testing, and testing. Before reaching the final version, we iterated through four different prototypes. Each time, we learned from users and stakeholders how to improve and deliver the next generation.

This image was taken at the MIT Media Lab's annual members' event, where we showcased the two latest generations.

I lead the HMI design for the PEV. The principle for PEV's HMIs is to design human-centric interactions. Our team believes that if the autonomous vehicle is coming to the urban areas, it should be the vehicle that learns how to interact with people, not the other way around.

We usually start by researching related HMI work and studying current driver-pedestrian communication patterns. We then propose design solutions that translate these experiences into HMIs that allow the vehicle to interact with humans.

However, it is hard to describe hardware UX to users without letting them experience the product. Therefore, we quickly build low-fidelity prototypes and work backward from user tests, iterating several times based on users' feedback. In this section, I showcase how we developed and tested the HMIs on the older PEV prototypes.

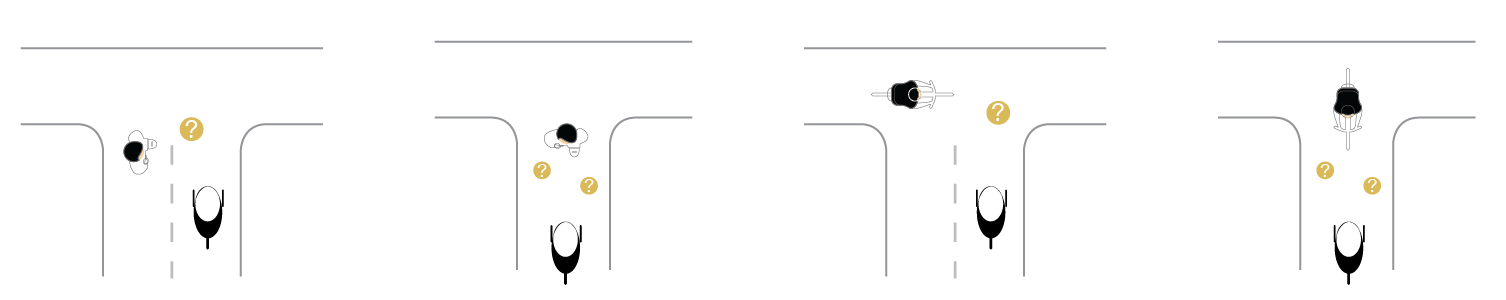

How to mutually convey the intention between PEV and the pedestrian?

When the PEV (a lightweight autonomous vehicle) is deployed in a city, pedestrians must be able to understand the vehicle's intentions, because there is no longer a driver to communicate with. It is therefore critical to design intuitive interactions that make those intentions clear.

For instance, if a pedestrian encounters the PEV at an intersection, how does the PEV signal that it is safe to pass? What could the pedestrian do to interact with the vehicle more intuitively and seamlessly?

Eye contact

In places frequently used by pedestrians and cyclists, the right-of-way is typically dynamic and negotiated through tacit interactions, beginning with each person acknowledging the presence of others. Using a webcam and computer vision techniques, the PEV makes eye contact to acknowledge nearby individuals. This establishes a basic level of trust and initiates communication and potential negotiation.

Emotional detection

Understanding human emotions is expected to be a key enabler of sustainable cohabitation between humans and machines in the future. Using a webcam and computer vision, the PEV determines the basic emotional state of its human collaborator, which could enable future PEVs to interact with people in a natural, sociable, and intuitive manner.

Object detection

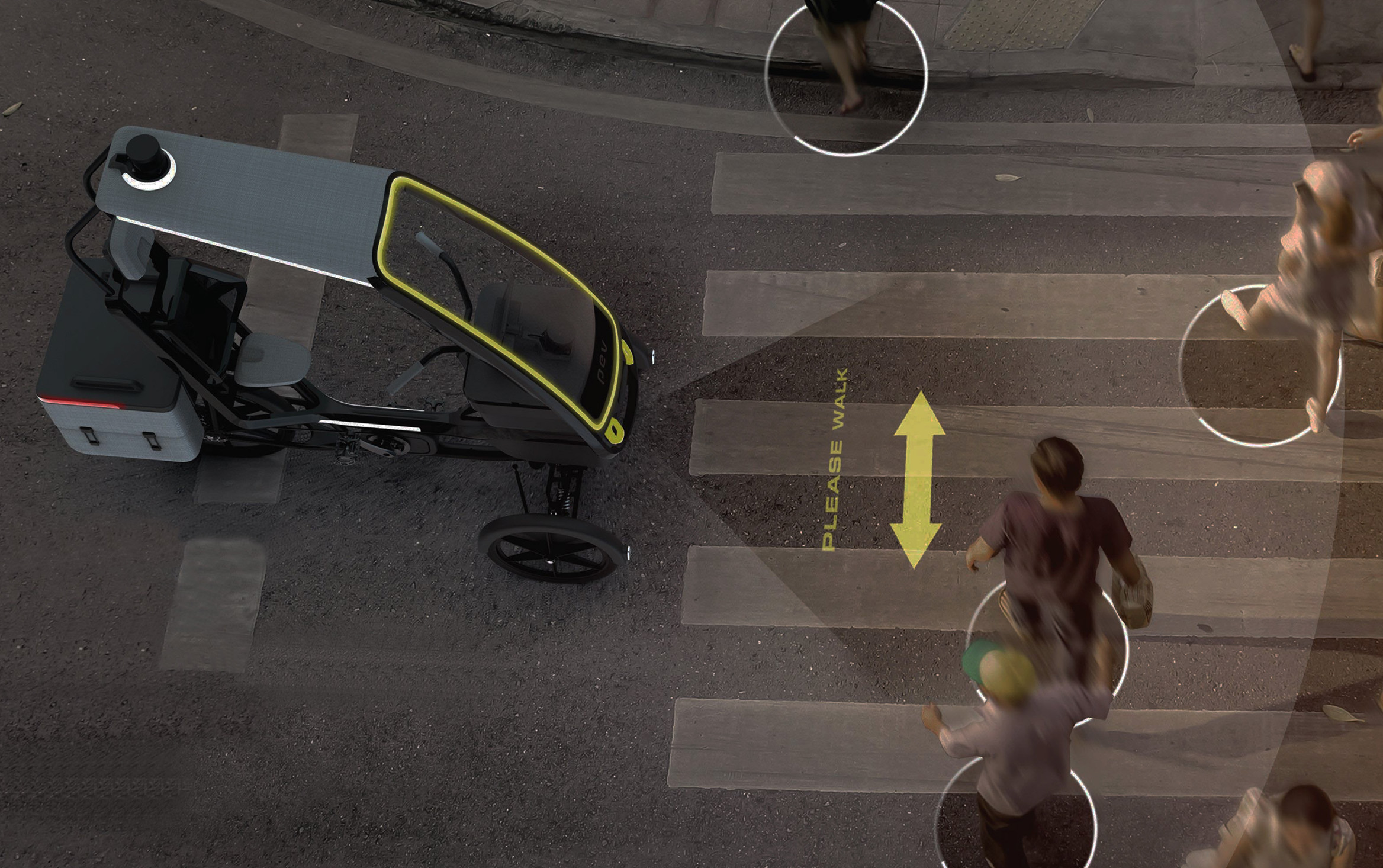

Locational awareness of people and objects surrounding the vehicle is critical to the safety and navigation of autonomous robots. The PEV uses LiDAR to maintain a live view of its surroundings and to determine its path and prevent collisions.

In this project, our team designed a pair of mechanical eyes as the PEV's headlights. The eyes point toward pedestrians to signal that it is safe for them to pass. They can also detect people's emotions and make "eye contact" with them, initiating potential conversations between humans and machines.
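Pointing an eye at a pedestrian reduces to basic trigonometry. The following is a minimal sketch, assuming the pedestrian's position is already known in the vehicle frame. It uses the ROS convention: x forward, y to the left, in metres, with the eye at the origin. The eye height, target height, and servo limits are hypothetical values, not the PEV's real dimensions.

```python
import math
from typing import Tuple

# Hypothetical mechanical limits for the eye servos (not from the original design).
PAN_LIMIT_DEG = 60.0
TILT_LIMIT_DEG = 30.0


def eye_angles(ped_x: float, ped_y: float, eye_height_m: float = 1.0,
               target_height_m: float = 1.6) -> Tuple[float, float]:
    """Return (pan, tilt) in degrees that point an eye at a pedestrian.

    Vehicle frame: x forward, y left (metres), eye at the origin.
    Positive pan turns left; positive tilt looks up. eye_height_m and
    target_height_m (roughly face height) are illustrative assumptions.
    """
    pan = math.degrees(math.atan2(ped_y, ped_x))
    ground_dist = math.hypot(ped_x, ped_y)
    tilt = math.degrees(math.atan2(target_height_m - eye_height_m, ground_dist))
    # Clamp to what the servos can physically reach.
    pan = max(-PAN_LIMIT_DEG, min(PAN_LIMIT_DEG, pan))
    tilt = max(-TILT_LIMIT_DEG, min(TILT_LIMIT_DEG, tilt))
    return pan, tilt
```

A pedestrian 3 m ahead and 3 m to the left yields a pan of 45 degrees. Someone standing directly to the side is clamped to the assumed 60-degree servo limit.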

We use projection mapping to visualize how a robot perceives the world. At night, the PEV detects human presence and projects a ring on the ground, raising pedestrians' sense of security when they interact with new mobility platforms.
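Drawing a ring that lands correctly on the ground requires mapping floor coordinates into projector pixels. The following is a minimal sketch using a planar homography, one common way to do this; the PEV's actual keystoning method is not described here. The 3x3 matrix `H` is assumed to come from a prior calibration. For example, it could be estimated with OpenCV's `findHomography` from four or more floor-to-pixel correspondences. All function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix3 = Sequence[Sequence[float]]


def apply_homography(H: Matrix3, x: float, y: float) -> Point:
    """Map a floor point (x, y) in metres to projector pixels (u, v)."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return u / w, v / w  # perspective divide


def ring_on_floor(cx: float, cy: float, radius_m: float, n: int = 36) -> List[Point]:
    """Sample n points of a circle of radius_m around a detected person at (cx, cy)."""
    return [(cx + radius_m * math.cos(2 * math.pi * k / n),
             cy + radius_m * math.sin(2 * math.pi * k / n)) for k in range(n)]


def ring_in_projector(H: Matrix3, cx: float, cy: float, radius_m: float,
                      n: int = 36) -> List[Point]:
    """Polygon in projector pixels that should appear as a ring on the floor."""
    return [apply_homography(H, x, y) for x, y in ring_on_floor(cx, cy, radius_m, n)]
```

Working on the floor plane and applying the homography only at the end means the ring keeps its true radius on the ground, regardless of the projector's angle.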

This was prototyped using ROS and Python. The LiDAR data shown in the video is how the PEV (a robot) perceives the world; the inflated blue circles represent obstacles. We extract the center points of the obstacles from the LiDAR data and project visualizations aligned with the corresponding obstacles in the real world. It also works with multiple users.
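The center-extraction step can be sketched without ROS. This is a minimal, assumption-laden version. The inputs mirror the `ranges`, `angle_min`, and `angle_increment` fields of a ROS `sensor_msgs/LaserScan` message. A simple gap-based clustering stands in for whatever obstacle segmentation the PEV actually used, and the 0.3 m gap is a hypothetical tuning value.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def scan_to_points(ranges: Sequence[float], angle_min: float, angle_increment: float,
                   range_max: float = 30.0) -> List[Point]:
    """Convert polar LiDAR readings to (x, y) in the sensor frame, dropping invalid returns."""
    points: List[Point] = []
    for i, r in enumerate(ranges):
        if not math.isfinite(r) or r <= 0.0 or r > range_max:
            continue  # no return, or outside the usable range
        a = angle_min + i * angle_increment
        points.append((r * math.cos(a), r * math.sin(a)))
    return points


def cluster_centers(points: Sequence[Point], gap_m: float = 0.3) -> List[Point]:
    """Group consecutive points closer than gap_m and return each group's centroid.

    Points are taken in scan order; wrap-around between the last and first
    beam of a 360-degree scan is ignored in this sketch.
    """
    clusters: List[List[Point]] = []
    for p in points:
        if clusters and math.dist(p, clusters[-1][-1]) < gap_m:
            clusters[-1].append(p)  # continue the current obstacle
        else:
            clusters.append([p])    # large jump: start a new obstacle
    return [(sum(x for x, _ in c) / len(c), sum(y for _, y in c) / len(c))
            for c in clusters]
```

Each returned centroid corresponds to one detected obstacle or person. This is what allows the projection to handle several people at once.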

The projection could also be used to express the vehicle's intentions. In the future, we will develop more personalized projections and use them to express the vehicle's emotions.

The PEV's vision system also enables pedestrians to use their posture as an input to interact with the PEV.
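Posture input can start from a very coarse rule over pose keypoints. The original prototype used JavaScript pose detection; this Python sketch only illustrates the idea. It assumes COCO-style keypoint names (`left_wrist`, `left_shoulder`, and so on) in image pixels, with y growing downward. The gesture labels and the 20-pixel margin are hypothetical.

```python
from typing import Dict, Optional, Tuple

Keypoints = Dict[str, Tuple[float, float]]  # name -> (x, y) in pixels, y grows downward


def detect_gesture(kp: Keypoints, margin_px: float = 20.0) -> Optional[str]:
    """Classify one pose into a coarse gesture the vehicle could react to.

    Returns "raise_left", "raise_right", "raise_both", or None.
    """
    def raised(side: str) -> bool:
        wrist = kp.get(f"{side}_wrist")
        shoulder = kp.get(f"{side}_shoulder")
        if wrist is None or shoulder is None:
            return False  # keypoint not detected in this frame
        # Smaller y means higher in the image.
        return wrist[1] < shoulder[1] - margin_px

    left, right = raised("left"), raised("right")
    if left and right:
        return "raise_both"
    if left:
        return "raise_left"
    if right:
        return "raise_right"
    return None
```

In practice, a rule like this would be smoothed over several frames before the vehicle acts on it, so that a single noisy pose estimate does not trigger a response.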

The video showcases how the headlights interact with riders.

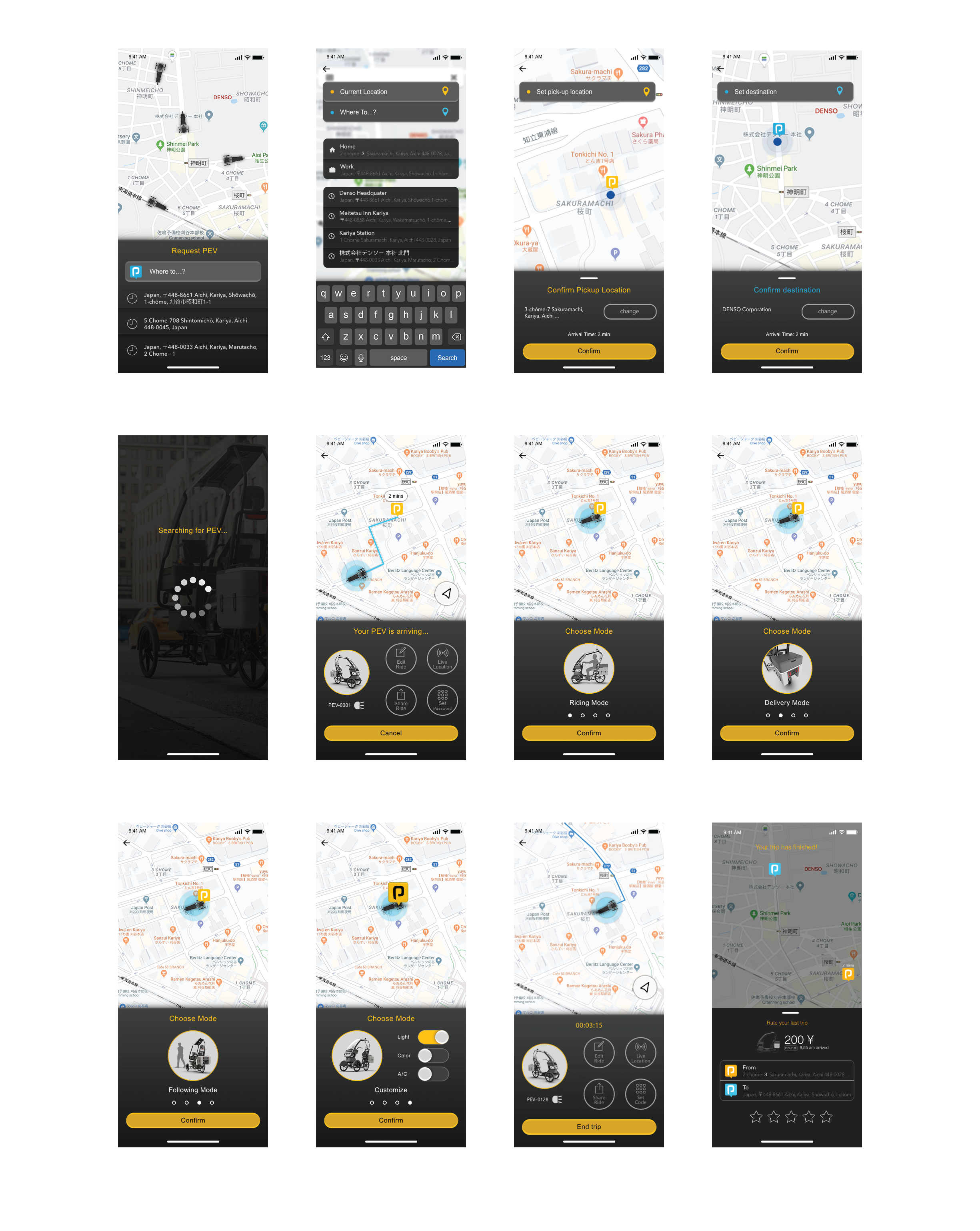

The Following Mode uses an ultra-wideband (UWB) sensor that allows the PEV to follow users, helping them carry groceries or bags. Watch the live demo video and see the mobile app wireframe for more details.
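A follow behavior like this can be approximated with a proportional controller. The following is a minimal sketch, assuming the UWB system reports both range and bearing to the user's tag; bearing might come from angle of arrival, and this is an assumption rather than a documented PEV feature. The gains, follow distance, and speed cap are hypothetical, and the sketch does no obstacle handling.

```python
import math
from typing import Tuple

# Illustrative tuning values; none of these come from the PEV itself.
FOLLOW_DISTANCE_M = 1.5         # gap to keep behind the user
MAX_SPEED_MPS = 1.5             # roughly walking pace
K_SPEED = 0.8                   # speed gain per metre of distance error
K_STEER = 1.2                   # steering gain per radian of bearing error
MAX_STEER_RAD = math.radians(30)


def follow_command(range_m: float, bearing_rad: float) -> Tuple[float, float]:
    """Return (speed in m/s, steering angle in rad) from one UWB reading.

    bearing_rad is positive to the left. Proportional control only.
    """
    error = range_m - FOLLOW_DISTANCE_M
    # Drive forward only; stop (never reverse) when inside the follow distance.
    speed = max(0.0, min(MAX_SPEED_MPS, K_SPEED * error))
    steer = max(-MAX_STEER_RAD, min(MAX_STEER_RAD, K_STEER * bearing_rad))
    return speed, steer
```

Clamping speed at zero means the vehicle simply waits when the user stops or walks back toward it. Reversing toward a pedestrian would be less predictable.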

Related HMI work, car driver and pedestrian communication pattern study.

Mechanical 3D modeling, hardware sensor layout study, software scripting (ROS, Python), LED color study.

Laser cutting, waterjet cutting, hardware assembly, JavaScript pose-detection interactive prototype, projection mapping and keystoning.

Collaborate with software teams to link the design system to ROS (Node.js) at production level.

All of the above HMIs were featured and showcased at the Cooper Hewitt Design Museum in New York. Visitors gave very positive feedback on the mechanical eyes, mentioning that the eyes give the PEV character and make it more approachable.

However, some visitors mentioned that it is hard to see the light changes in the eyes during the daytime. To solve this problem, the eyes need clearer mechanical movement in addition to the LED light. I need to study further how car drivers communicate with pedestrians (waving, head movements, etc.) and translate that human language into machine language. I then need to test it with more users to find the most intuitive and direct means of communication.

Because this project has patents pending, I can only show part of the design here. Please contact me directly if you would like to learn more.

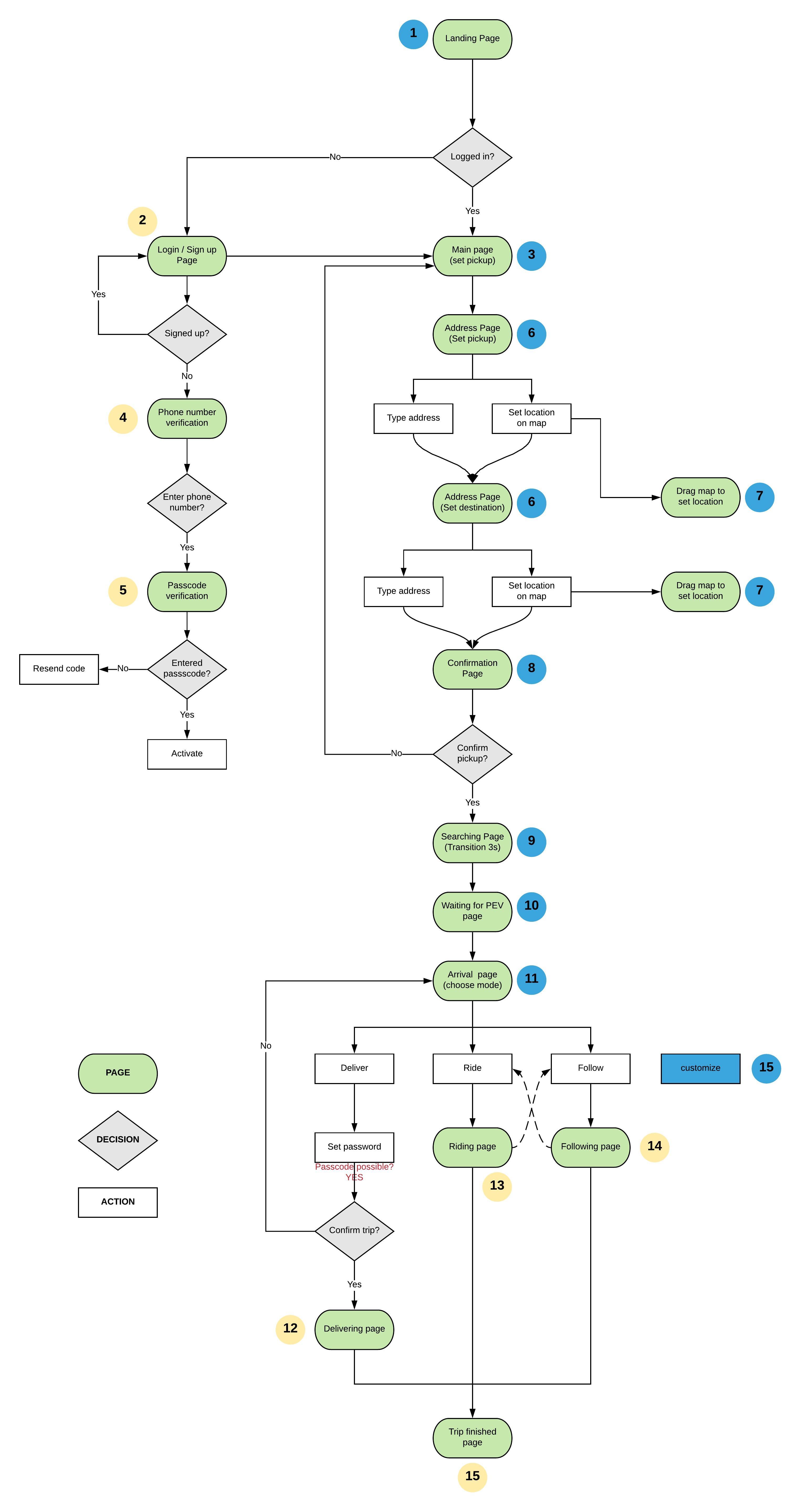

Context study, ride-share app research, storyboards, personas.

User journey map, low-fidelity paper prototyping, wireframe, typography, use cases, UX design.

HTML, CSS, JavaScript, Sketch, Adobe XD, InVision.

Collaborate with software teams to link the design system to ROS (Node.js).

The prototype(s) were tested in the United States, Taiwan, and Japan.